Indexed In

- Open J Gate

- Genamics JournalSeek

- SafetyLit

- RefSeek

- Hamdard University

- EBSCO A-Z

- OCLC- WorldCat

- Publons

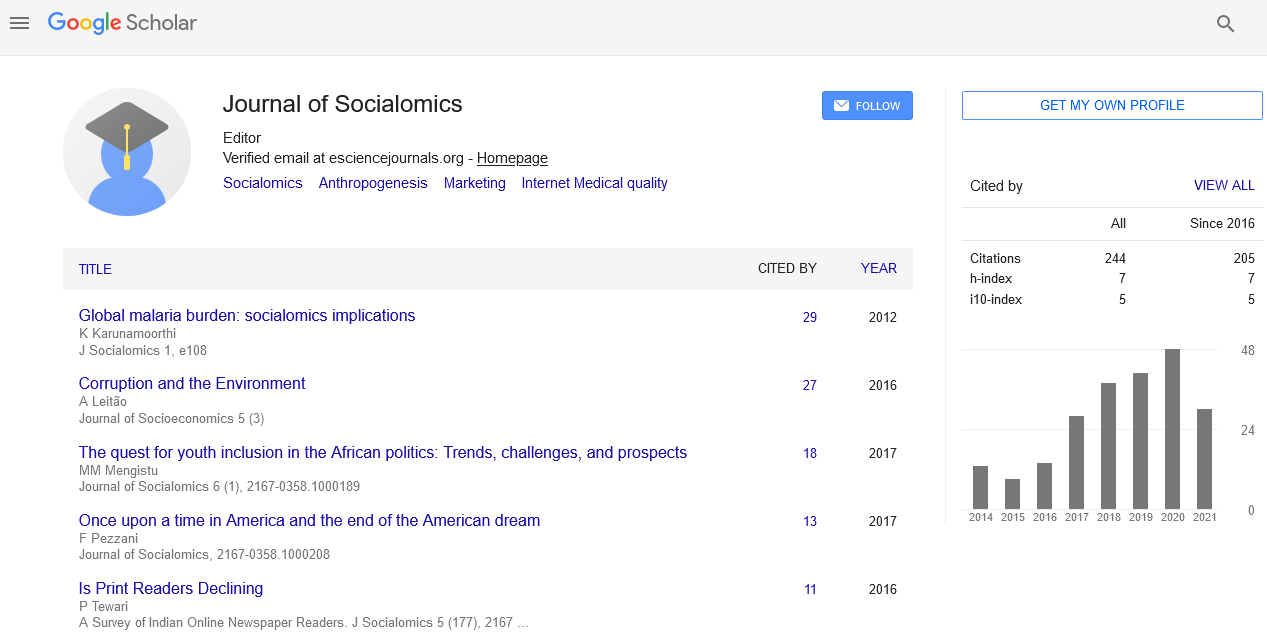

- Google Scholar

Useful Links

Share This Page

Journal Flyer

Open Access Journals

- Agri and Aquaculture

- Biochemistry

- Bioinformatics & Systems Biology

- Business & Management

- Chemistry

- Clinical Sciences

- Engineering

- Food & Nutrition

- General Science

- Genetics & Molecular Biology

- Immunology & Microbiology

- Medical Sciences

- Neuroscience & Psychology

- Nursing & Health Care

- Pharmaceutical Sciences

Perspective - (2022) Volume 11, Issue 12

Geologist's Hypotheses of Robot Knowledge Learning and Human Resource

Giuliani Wagner*

Received: 25-Nov-2022, Manuscript No. JSC-22-19443; Editor assigned: 28-Nov-2022, Pre QC No. JSC-22-19443 (PQ); Reviewed: 13-Dec-2022, QC No. JSC-22-19443; Revised: 20-Dec-2022, Manuscript No. JSC-22-19443 (R); Published: 28-Dec-2022, DOI: 10.35248/2167-0358.22.11.159

Description

Social robots do not yet have the technological capacity to function faultlessly. However, most study methodologies presume flawless robot performance. This narrows the perspective: the conditions generated for the experiment are treated as the standard, and alternatives that emerge from unanticipated situations during an experiment are frequently ignored or even discarded. Adhering to a rigid protocol is inherent to thorough scientific study. Human-robot interaction was explored in science fiction and academic study even before robots existed. Many aspects of Human-Robot Interaction (HRI) are continuations of human communication, a field of research far older than robotics, since most ongoing HRI development relies on natural language processing.

Although the domains of robot ethics and machine ethics are more complicated than Asimov's Three Laws of Robotics, those three rules give an overview of the objectives engineers and researchers hold for safety in the Human-Robot Interaction (HRI) field. When people engage with potentially hazardous robotic technology, safety is usually given priority. Two potential solutions to this issue are the philosophical approach of recognizing robots as moral agents (individuals with moral agency) and the practical method of establishing safety zones. These safety zones use technology such as lidar to detect human presence, or physical barriers, to safeguard people by preventing any contact between machinery and operators.
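The safety-zone idea can be illustrated with a minimal Python sketch. It is not taken from the article: the function names, the 1.5 m radius, and the rule that any lidar return inside the zone counts as a person are all assumptions made for illustration.

```python
from typing import Sequence


def human_in_safety_zone(ranges_m: Sequence[float], zone_radius_m: float) -> bool:
    """Return True if any valid lidar return lies inside the safety zone.

    Assumption: every return closer than zone_radius_m is treated as a
    possible person. Non-positive, infinite, and NaN readings are ignored.
    """
    return any(0.0 < r <= zone_radius_m for r in ranges_m)


def machine_command(ranges_m: Sequence[float], zone_radius_m: float = 1.5) -> str:
    """Stop the machine while the zone is occupied (hypothetical policy)."""
    return "STOP" if human_in_safety_zone(ranges_m, zone_radius_m) else "RUN"
```

A real installation would rely on certified safety hardware and a validated stopping distance rather than a single threshold; the sketch only shows the decision logic.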

The learning and processing block is the heart of a Human-Robot Interaction (HRI) system. It processes data from the task model, the interaction model, and the input block to generate control signals and carry out the intended task. It also uses a variety of computational methods, including Deep Learning (DL), neural networks, and optimization techniques, to broaden and improve the robot's knowledge and learning in preparation for future tasks. The main challenge for this block is maintaining system stability and the robot's dynamic behaviour, a concern that applies to machine learning as well.

People want to know why complex systems fail. Depending on the definition of error used in a study, humans are held responsible for anywhere between 20% and 80% of system failures. Unfortunately, leading scholars in the field do not agree on the most accurate definition of human error. A typical definition is a decision that results in an unintended, undesirable, or generally poor outcome. In practice, such errors can make a system less effective overall or even cause it to fail.

The field test, designed to resemble the Mars Exploration Rover (MER) missions launched in the summer of 2003, concentrated on expert decision-making in robotic geology.

Three planetary geology specialists took part in the three-day field test. The field test had two parts: a mission control room and a remote field location with a simulated rover. Each daily field test consisted of a six-hour simulated expedition during which the geologists examined the field location. Every morning in the mission control room, a two-tier site was the initial data set sent to the geologists. The geologists then requested fresh information on the field site every two hours. The amount of data they could request reflected both the overall quantity of data that could be returned from the real Mars Exploration Rover (MER) vehicles and the file sizes for each data category. Following the mission, the geologists went to the field site to look for discrepancies between what they had seen in the control room and what was actually there. Each day's expedition used a different field site to reduce biases based on data from the day before.

Two methods of data collection were employed in the field test. First, audio and video recordings captured the geologists' decision-making processes; these two sources yielded a transcript of the full session. Second, the geologists were interrupted and interviewed every ten minutes in order to actively gather data on their actions. During the interviews, the geologists listed their current activities, hypotheses, and findings. Together, these data sources supplied a list of the geologists' hypotheses and findings from the field test, along with the data used to support each one.
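The constraint on the geologists' two-hourly data requests can be sketched as a simple budget check. The article gives no figures, so the category names, file sizes, and budget below are placeholder values assumed purely for illustration.

```python
from typing import Mapping

# Hypothetical file size per data category, in megabytes (placeholder values,
# not figures from the field test).
FILE_SIZE_MB = {"panorama": 40.0, "close_up": 8.0, "spectrum": 2.0}


def request_size_mb(request: Mapping[str, int]) -> float:
    """Total size of a request given as {category: number of items}."""
    return sum(FILE_SIZE_MB[category] * count for category, count in request.items())


def fits_budget(request: Mapping[str, int], budget_mb: float) -> bool:
    """Return True if the request stays within the data budget for one cycle."""
    return request_size_mb(request) <= budget_mb
```

With these placeholder sizes, a request for one panorama and two close-ups totals 56 MB and would fit a 60 MB cycle budget, whereas two panoramas would not.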

Citation: Wagner G (2022) Geologist's Hypotheses of Robot Knowledge Learning and Human Resource. J Socialomics. 11:159

Copyright: © 2022 Wagner G. This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.