Review Article - (2024) Volume 12, Issue 4

Designing a Model for Evaluation and Classification of Startup Accelerators Using Data Envelopment Analysis Method

Seyed Sharaf Hosseini Nasab*

Received: 24-Jul-2020, Manuscript No. RPAM-24-5598; Editor assigned: 29-Jul-2020, Pre QC No. RPAM-24-5598 (PQ); Reviewed: 12-Aug-2020, QC No. RPAM-24-5598; Revised: 16-Aug-2024, Manuscript No. RPAM-24-5598 (R); Published: 13-Sep-2024, DOI: 10.35248/2315-7844.24.12.473

Abstract

The spread of technology and the development of an entrepreneurial culture have given the growth of startups a significant impact on the economy. A Deloitte consulting study of data for England and Wales going back to 1871 suggests that technology has created far more jobs than it has destroyed. In addition, new businesses account for virtually all net job creation and 20% of gross job creation in the United States. According to other statistics, young companies have been responsible for two-thirds of job creation, which means about 4 new jobs per company per year. Companies less than one year old have, on average, created 1.5 million jobs a year over the past three decades. Even during the economic downturn between 2006 and 2009, young, small firms (under 5 years of age and with fewer than 20 employees) remained a net positive source of employment growth (8.6 percent), while older and larger enterprises were shedding jobs.

Keywords

Old age; Social issue; Isolation; Family response

Introduction

The large contribution of emerging and small businesses to employment is worthy of consideration. However, although some 450,000 new companies and businesses are created annually in the United States, most of them fail and disappear, and statistics show that, despite a lot of attention to startups, their success rate is not promising. Given these statistics, support for small and startup companies has been a serious concern of government policy makers and private sector actors over the past decades [1]. That is why growth centers that support startups have long existed; their history dates from 1959. After the first business growth center was founded in New York, their number reached 12 by 1980 and rose to 600 by 1995. The rapid spread of growth centers nevertheless failed to give new businesses the opportunity to grow in their early stages, and the weakness of the growth center support model became more apparent after the collapse of the stock market bubble in the early 21st century. In this context, the entrepreneurship ecosystem sought a new framework to replace growth centers, and the first American accelerator, Y Combinator, was founded in 2005. Subsequently, many accelerators were created in the United States and elsewhere. In some reports, nearly 700 organizations in the United States were identified as accelerators or growth centers, of which fewer than one-third met the specific requirements and conditions of an accelerator. An accelerator program, as Miller and Bound defined it in their foundational article, has five main attributes that differentiate it from other types of funding and growth centers [2].

• An application process that is open to all yet highly competitive

• Funding provided in exchange for equity

• A focus on startup teams rather than individual founders

• Comprehensive but short-term mentoring

• Attention to cohorts of startups rather than individual companies

Literature Review

Although today many accelerators have been established around the world and their number is increasing every day, only a small number of them have all of the five features mentioned above.

Therefore, an appropriate regional and global assessment of accelerator performance is needed. Despite our inquiries with entrepreneurship-related statistical bodies such as the Vice Presidency for Science and Technology, there are currently no detailed statistics on the performance of Iranian accelerators with which to assess them. The main reason is that these accelerators are recent, so the outputs of most of them have not yet matured or entered the market. We therefore focused on the world's top accelerators. The first accelerator classification, launched in 2014, was the "Startup accelerator ranking project", which assesses the performance of American accelerators and is repeated every year. The project classifies US accelerators into five categories: platinum plus, platinum, gold, silver and bronze. The classification is based on criteria such as valuation, exit rates, total funding raised, startup success rates, founder satisfaction and alumni network (SeedRanking.com). Although this ranking project is an invaluable attempt to prioritize accelerators, the performance of a system should not be judged by its outputs alone; the ratio of outputs to inputs should be considered one of the most important evaluation indicators [3]. Data envelopment analysis is a suitable method for this type of evaluation, because it determines the performance of each unit by comparing output and input values in a comprehensive way. In this paper, we combine data envelopment analysis models to rank accelerators in the United States. The results of this research can benefit the entrepreneurial ecosystem in several ways.

The first advantage of this performance appraisal is that American accelerators, as the world's first and most advanced accelerators, have always been featured in brochures and articles on entrepreneurship as examples of the new support model for startups, so a review of their performance can serve as a sound critique of this new form of support. The second advantage is that the assessment can identify and introduce the best accelerators with respect to input and output indicators as suitable models for Iranian accelerators. Iranian accelerators, which by some statistics have reached 80 in number in recent years, can optimize their acceleration processes by benchmarking against the best accelerators identified in this study. A further advantage of this ranking is that entrepreneurs and startups can choose the best acceleration program based on scientific analysis of real data rather than on accelerator advertisements [4].

Assessment of accelerators

In this study, we use efficiency as the criterion for evaluating accelerators. The efficiency of a unit cannot be measured merely by considering its outputs; its inputs must also be taken into account. Efficiency is therefore the ratio of a unit's outputs to its inputs. According to this definition, to evaluate the efficiency of an accelerator, it is first necessary to determine its inputs and outputs and then to compute the ratio of the two. In the US startup accelerator ranking project (SeedRanking.com), only accelerator outputs are taken into account, which is not a good measure of performance. In this study, we consider accelerator inputs in addition to outputs. The inputs and outputs of an accelerator are described below [5].
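The ratio definition can be illustrated with a minimal Python sketch. Because an accelerator has several inputs and several outputs, they must be aggregated before a ratio can be taken; here this is done with fixed weights. The function name, the weights and all numbers are assumptions made up for illustration and do not come from the study.

```python
from typing import Sequence


def ratio_efficiency(
    inputs: Sequence[float],
    outputs: Sequence[float],
    input_weights: Sequence[float],
    output_weights: Sequence[float],
) -> float:
    """Weighted sum of outputs divided by weighted sum of inputs."""
    weighted_in = sum(w * x for w, x in zip(input_weights, inputs))
    weighted_out = sum(w * y for w, y in zip(output_weights, outputs))
    if weighted_in <= 0:
        raise ValueError("weighted input must be positive")
    return weighted_out / weighted_in


# Hypothetical accelerators (all numbers invented):
# inputs  = (number of mentors, total funding in $M)
# outputs = (number of exits, funding raised in $M)
in_w, out_w = [0.1, 1.0], [1.0, 0.5]
small = ratio_efficiency([10, 2.0], [3, 12.0], in_w, out_w)
large = ratio_efficiency([100, 40.0], [12, 90.0], in_w, out_w)

# The large unit has higher outputs, but the small unit has the higher ratio.
print(round(small, 2), round(large, 2))
```

With these made-up figures the smaller accelerator is the more efficient one, even though an output-only comparison would favor the larger one. The result depends on the chosen weights, which is the difficulty that a method selecting weights from the data is meant to address.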

Accelerator inputs

The inputs of an accelerator are the resources required to perform an accelerator activity in order to achieve its purpose. These resources, according to various definitions, include the following:

Number of mentors: A mentor is someone who, by providing the necessary support, enables startup teams to reach the skill and performance they need at each stage and challenge of their startup. Mentors also try to create a clear and consistent perspective for the members of the team.

Number of startups funded: As its name implies, this item is the total number of startups funded and sponsored by an accelerator since its founding [6].

Amount of funding per startup: Each accelerator pays a sum of money, in cash and in several installments, to support the startup team. This amount is determined by factors such as the accelerator's funds, its funding policies and the market average.

Total funding: The sum of all investments that an accelerator has made in various startups.

Accelerator equity share: The agreement between the accelerator and the startup team under which the startup allocates a percentage of its shares to the accelerator in exchange for the accelerator's support and services.

Accelerator outputs

Number of exits: The venture investment process is divided into three stages: pre-investment, post-investment and exit. Exit is therefore part of the entrepreneurial process and is very important for business owners and other stakeholders in the economic ecosystem. Exit means that the funder or shareholder, after examining the conditions, withdraws from the business in order to continue entrepreneurial activity elsewhere and transfers his or her share to another party. In other words, at some stage of the business life cycle, the funder sells its share and turns it into cash. An accelerator exit therefore means that the accelerator, as the primary funder, has sold its stake in a startup in order to fund another startup [7].

Total exits: The sum of the successful exits of an accelerator during a particular period. It includes all cash inflows to the accelerator generated by the sale of its shares in the various startups.

Total funding raised: The capital injected into, or attracted by, an accelerator's startups in funding rounds, with goals such as scaling up, profitability, support or survival of the startup.
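As a minimal sketch, the five inputs and three outputs listed above could be held in one record per accelerator before any efficiency model is applied. The class name, field names, units and example values below are hypothetical and are not taken from the study or from seed-db.com.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class AcceleratorRecord:
    """One evaluated unit: five inputs and three outputs, as listed above."""
    name: str
    # Inputs
    mentors: int
    startups_funded: int
    funding_per_startup: float  # currency units per startup
    total_funding: float        # currency units
    equity_share: float         # fraction of startup shares taken, e.g. 0.06
    # Outputs
    exits: int
    total_exit_value: float     # currency units
    funding_raised: float       # currency units

    def inputs(self) -> List[float]:
        """Input vector in a fixed order."""
        return [
            float(self.mentors),
            float(self.startups_funded),
            self.funding_per_startup,
            self.total_funding,
            self.equity_share,
        ]

    def outputs(self) -> List[float]:
        """Output vector in a fixed order."""
        return [float(self.exits), self.total_exit_value, self.funding_raised]


# Hypothetical accelerator; none of these numbers come from the study.
example = AcceleratorRecord(
    name="Example Accelerator",
    mentors=40,
    startups_funded=120,
    funding_per_startup=50_000.0,
    total_funding=6_000_000.0,
    equity_share=0.06,
    exits=9,
    total_exit_value=45_000_000.0,
    funding_raised=150_000_000.0,
)
print(example.inputs(), example.outputs())
```

Keeping the vectors in a fixed order matters because the evaluation models compare the same input and output positions across all units.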

Evaluation method

In this research, Data Envelopment Analysis (DEA), a linear programming approach, has been used to evaluate American accelerators. DEA is a data-driven approach used to evaluate the performance of a number of similar units known as Decision Making Units (DMUs), each of which converts a set of inputs into a set of outputs. Since DEA was first introduced in 1978, researchers have recognized it as a convenient and easy way to model performance evaluation in different areas. DEA is widely used in many countries and in various fields to evaluate the performance of systems and units with diverse functions such as banks, cities, schools, businesses and even countries and regions.

DEA is not only a way to evaluate performance but also offers a new perspective on entities that have previously been evaluated by other approaches. For this reason, DEA has also been used in studies evaluating the effectiveness of the past and planned activities of the units under study. In DEA, units are divided into two types: efficient and inefficient. For units found to be inefficient, the method indicates how they can be improved, and it is well suited to units with multiple inputs and multiple outputs.

DEA models have two general orientations: the input-oriented approach, which focuses on inputs, and the output-oriented approach, which focuses on outputs [8].

In this regard, Charnes, Cooper and Rhodes describe efficiency as follows:

• In an input-oriented model, a unit is inefficient if any of its inputs can be reduced without increasing another input or reducing any output.

• In an output-oriented model, a unit is inefficient if any of its outputs can be increased without reducing another output or increasing any input.

A decision making unit is efficient when neither of the above is possible. In that case its efficiency equals one. An efficiency below one indicates that a linear combination of other units can produce at least the same amount of output with fewer inputs, so the unit is inefficient by the definition above.

The two basic DEA models are the CCR model, which assumes constant returns to scale, and the BCC model, which assumes variable returns to scale. Both are briefly explained below.

CCR model

In 1978, Charnes, Cooper and Rhodes published the foundational paper on the CCR model. The model lets the researcher compare the observed inputs and outputs of the units; to do so, weights for the inputs and outputs must be determined. The efficiency of each unit is then measured as follows:

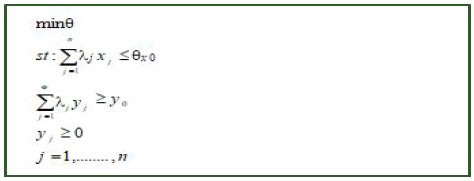

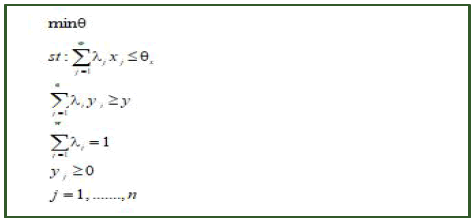
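The model's equations are carried by the images above. For reference, the standard textbook statement of the CCR model is sketched here; the notation is an assumption and may differ from that of the images. Let there be $n$ units, where unit $j$ uses inputs $x_{ij}$ ($i = 1, \dots, m$) to produce outputs $y_{rj}$ ($r = 1, \dots, s$), and let $o$ denote the unit under evaluation. In ratio form, the model chooses the output weights $u_r$ and input weights $v_i$ most favorable to unit $o$:

$$
\max_{u,v}\; \theta_o = \frac{\sum_{r=1}^{s} u_r y_{ro}}{\sum_{i=1}^{m} v_i x_{io}}
\quad \text{s.t.} \quad
\frac{\sum_{r=1}^{s} u_r y_{rj}}{\sum_{i=1}^{m} v_i x_{ij}} \le 1 \;\; (j = 1, \dots, n), \qquad u_r \ge 0,\; v_i \ge 0 .
$$

Normalizing the weighted input of unit $o$ to one gives the equivalent linear program:

$$
\max_{u,v}\; \sum_{r=1}^{s} u_r y_{ro}
\quad \text{s.t.} \quad
\sum_{i=1}^{m} v_i x_{io} = 1, \qquad
\sum_{r=1}^{s} u_r y_{rj} - \sum_{i=1}^{m} v_i x_{ij} \le 0 \;\; (j = 1, \dots, n), \qquad u_r \ge 0,\; v_i \ge 0 .
$$

The program is solved once for each unit, and a unit is CCR-efficient when its optimal value equals one.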

BCC model

If returns to scale are variable, the CCR model is not suitable for measuring efficiency. Banker, Charnes and Cooper therefore introduced the BCC model in 1984, which allows variable returns to scale.
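The BCC formulation is not reproduced in the text. As a sketch in standard textbook notation (an assumption, not necessarily the notation of the original article), let unit $j$ use inputs $x_{ij}$ ($i = 1, \dots, m$) to produce outputs $y_{rj}$ ($r = 1, \dots, s$), and let $o$ be the unit under evaluation. The input-oriented BCC model in multiplier form adds one unrestricted variable $u_0$ to the linear CCR program:

$$
\max_{u,v,u_0}\; \sum_{r=1}^{s} u_r y_{ro} + u_0
\quad \text{s.t.} \quad
\sum_{i=1}^{m} v_i x_{io} = 1, \qquad
\sum_{r=1}^{s} u_r y_{rj} - \sum_{i=1}^{m} v_i x_{ij} + u_0 \le 0 \;\; (j = 1, \dots, n), \qquad u_r \ge 0,\; v_i \ge 0,\; u_0 \text{ free}.
$$

Setting $u_0 = 0$ recovers the CCR program, so the BCC efficiency of a unit is never lower than its CCR efficiency. In the dual (envelopment) form, the free variable corresponds to the convexity constraint $\sum_{j=1}^{n} \lambda_j = 1$ on the weights used to combine the observed units.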

Case study

In this section, the performance of the units is evaluated using the models of the previous section. We examined 59 of the most advanced American accelerators and calculated their efficiency with the two data envelopment analysis approaches. These 59 accelerators were selected through the analytics website seed-db.com, which tracks the status of accelerators around the world and reports their performance on the indicators and outputs mentioned in the previous sections of the paper. As we focus on American accelerators, we extracted the relevant information from this website. Information on some of the indicators, such as the number of mentors or the percentage of shares that accelerators receive in exchange for funding, was not available on this website, and we tried to extract it from the official websites of the accelerators. Accelerators for which we could not extract this information, or which were not focused solely on the United States, were removed from our list. For example, some American accelerators were removed despite appearing in the ranking project's list, because they also run acceleration programs in other parts of the world [9]. These accelerators include Techstars, HAX, Healthbox, Plug and Play and Zero to 510. A comprehensive ranking of global accelerators will be explored in a different article. Table 1 shows the efficiency of each accelerator under each of the two models, so each accelerator has two efficiency values.

| Accelerator | Efficiency (first model) | Efficiency (second model) |

|---|---|---|

| DMU01 | 1 | 1 |

| DMU02 | 1 | 0.3408 |

| DMU03 | 1 | 1 |

| DMU04 | 1 | 0.5625 |

| DMU05 | 0.4853 | 0.2746 |

| DMU06 | 1 | 0.233 |

| DMU07 | 1 | 0.613 |

| DMU08 | 1 | 0.092 |

| DMU09 | 0.7897 | 0.5225 |

| DMU10 | 1 | 0.1902 |

| DMU11 | 1 | 1 |

| DMU12 | 0.6699 | 0.2202 |

| DMU13 | 0.2824 | 0.0521 |

| DMU14 | 1 | 0.3765 |

| DMU15 | 0.606 | 0.2511 |

| DMU16 | 0.5905 | 0.0335 |

| DMU17 | 0.913 | 0.7056 |

| DMU18 | 0.4147 | 0.2407 |

| DMU19 | 0.324 | 0.0971 |

| DMU20 | 0.2432 | 0.1102 |

| DMU21 | 1 | 0.5109 |

| DMU22 | 0.5336 | 0.2854 |

| DMU23 | 0.9242 | 0.549 |

| DMU24 | 0.6706 | 0.274 |

| DMU25 | 0.8724 | 0.4524 |

| DMU26 | 0.344 | 0.2078 |

| DMU27 | 0.719 | 0.2556 |

| DMU28 | 0.7874 | 0.0459 |

| DMU29 | 1 | 0.3596 |

| DMU30 | 1 | 0.2644 |

| DMU31 | 0.594 | 0.1402 |

| DMU32 | 0.5654 | 0 |

| DMU33 | 0.1006 | 0.0245 |

| DMU34 | 0.3729 | 0 |

| DMU35 | 0.8342 | 0.4692 |

| DMU36 | 0.1663 | 0 |

| DMU37 | 0.5587 | 0.1943 |

| DMU38 | 0.9981 | 0.3596 |

| DMU39 | 0.4703 | 0 |

| DMU40 | 0.1161 | 0 |

| DMU41 | 0.6102 | 0.454 |

| DMU42 | 0.1806 | 0 |

| DMU43 | 0.4254 | 0.0936 |

| DMU44 | 0.0631 | 0 |

| DMU45 | 0.2362 | 0.1602 |

| DMU46 | 0.3599 | 0.1602 |

| DMU47 | 0.064 | 0 |

| DMU48 | 0.0661 | 0 |

| DMU49 | 0.0435 | 0 |

| DMU50 | 0.2078 | 0.081 |

| DMU51 | 0.4325 | 0.2165 |

| DMU52 | 0.0285 | 0 |

| DMU53 | 0.787 | 0.1119 |

| DMU54 | 0.4383 | 0.0416 |

| DMU55 | 0.0328 | 0 |

| DMU56 | 0.408 | 0.0665 |

| DMU57 | 0.1454 | 0 |

| DMU58 | 0.4873 | 0.2137 |

| DMU59 | 0.2001 | 0.0794 |

Table 1: American accelerator performance.
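One reason two DEA models can assign different scores to the same unit is the returns-to-scale assumption. The following Python sketch illustrates this for the special case of a single input and a single output, where both scores can be computed without a linear programming solver. It is not the computation behind Table 1, which involves five inputs and three outputs; the function names and the four data points are hypothetical.

```python
from itertools import combinations
from typing import List, Tuple

Unit = Tuple[float, float]  # (input x, output y), both positive


def crs_efficiency(units: List[Unit], o: int) -> float:
    """Constant returns to scale: unit o's output/input ratio
    relative to the best ratio observed among all units."""
    best = max(y / x for x, y in units)
    x_o, y_o = units[o]
    return (y_o / x_o) / best


def vrs_efficiency(units: List[Unit], o: int) -> float:
    """Input-oriented, variable returns to scale: the smallest input,
    as a fraction of unit o's input, with which a convex combination
    of observed units still produces at least unit o's output."""
    x_o, y_o = units[o]
    # Any single observed unit producing at least y_o is a feasible benchmark.
    candidates = [x for x, y in units if y >= y_o]
    # So is a mix of two units whose outputs bracket y_o.
    for (xa, ya), (xb, yb) in combinations(units, 2):
        lo, hi = sorted([(ya, xa), (yb, xb)])  # order the pair by output
        if lo[0] < y_o < hi[0]:
            lam = (hi[0] - y_o) / (hi[0] - lo[0])  # weight on the lower-output unit
            candidates.append(lam * lo[1] + (1 - lam) * hi[1])
    return min(candidates) / x_o


# Hypothetical (input, output) pairs for four units.
units = [(2.0, 1.0), (4.0, 4.0), (8.0, 6.0), (6.0, 3.0)]
crs = [crs_efficiency(units, i) for i in range(len(units))]
vrs = [vrs_efficiency(units, i) for i in range(len(units))]
for i, (c, v) in enumerate(zip(crs, vrs)):
    print(f"unit {i}: CRS = {c:.4f}, VRS = {v:.4f}")
```

In this toy data only the second unit is efficient under constant returns to scale, while the first three are all efficient under variable returns to scale, because very small and very large units are compared only with benchmarks of similar scale. The variable-returns score is never below the constant-returns score.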

Each of the two models yields an efficiency score for each accelerator. The first model seeks the most favorable efficiency for each accelerator. The second model seeks to increase the efficiency of all accelerators. A third model, by contrast, identifies the accelerators that are least efficient and tries to increase their performance.

Based on the efficiency values obtained, it is possible to determine the rank and position of each accelerator. Naturally, because the evaluation models differ in approach and therefore produce different efficiency values, the rankings of the accelerators can differ as well. Table 2 shows the rank of each accelerator under each model [10].

| Accelerator | Ranking (first model) | Ranking (second model) |

|---|---|---|

| DMU01 | 1 | 1 |

| DMU02 | 1 | 14 |

| DMU03 | 1 | 1 |

| DMU04 | 1 | 4 |

| DMU05 | 21 | 16 |

| DMU06 | 1 | 22 |

| DMU07 | 1 | 3 |

| DMU08 | 1 | 35 |

| DMU09 | 7 | 6 |

| DMU10 | 1 | 28 |

| DMU11 | 1 | 1 |

| DMU12 | 12 | 23 |

| DMU13 | 32 | 39 |

| DMU14 | 1 | 11 |

| DMU15 | 14 | 20 |

| DMU16 | 16 | 42 |

| DMU17 | 4 | 2 |

| DMU18 | 26 | 21 |

| DMU19 | 31 | 33 |

| DMU20 | 33 | 32 |

| DMU21 | 1 | 7 |

| DMU22 | 19 | 15 |

| DMU23 | 3 | 5 |

| DMU24 | 11 | 17 |

| DMU25 | 5 | 10 |

| DMU26 | 30 | 26 |

| DMU27 | 10 | 19 |

| DMU28 | 8 | 40 |

| DMU29 | 1 | 12 |

| DMU30 | 1 | 18 |

| DMU31 | 15 | 30 |

| DMU32 | 17 | 44 |

| DMU33 | 41 | 43 |

| DMU34 | 28 | 45 |

| DMU35 | 6 | 8 |

| DMU36 | 38 | 46 |

| DMU37 | 18 | 27 |

| DMU38 | 2 | 13 |

| DMU39 | 22 | 47 |

| DMU40 | 40 | 48 |

| DMU41 | 13 | 9 |

| DMU42 | 37 | 49 |

| DMU43 | 25 | 34 |

| DMU44 | 44 | 50 |

| DMU45 | 34 | 29 |

| DMU46 | 29 | 29 |

| DMU47 | 43 | 51 |

| DMU48 | 42 | 52 |

| DMU49 | 45 | 53 |

| DMU50 | 35 | 36 |

| DMU51 | 24 | 24 |

| DMU52 | 47 | 54 |

| DMU53 | 9 | 31 |

| DMU54 | 23 | 41 |

| DMU55 | 46 | 55 |

| DMU56 | 27 | 38 |

| DMU57 | 39 | 56 |

| DMU58 | 20 | 25 |

| DMU59 | 36 | 37 |

Table 2: American accelerator rankings.
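The first-model ranks in Table 2 are consistent with a dense ranking of the first-model efficiencies in Table 1: all units with efficiency 1 share rank 1, and each lower distinct value receives the next integer. The sketch below shows that rule on five values copied from Table 1; the function name is hypothetical, and the rule is inferred from the tables rather than stated by the author.

```python
from typing import Dict


def dense_rank(scores: Dict[str, float]) -> Dict[str, int]:
    """Rank by descending score; tied units share a rank and the
    next distinct score receives the next integer."""
    distinct = sorted(set(scores.values()), reverse=True)
    rank_of = {value: position for position, value in enumerate(distinct, start=1)}
    return {name: rank_of[value] for name, value in scores.items()}


# First-model efficiencies of five units, copied from Table 1.
first_model = {
    "DMU01": 1.0,
    "DMU02": 1.0,
    "DMU38": 0.9981,
    "DMU23": 0.9242,
    "DMU17": 0.913,
}
ranks = dense_rank(first_model)
print(ranks)
```

For these five units the computed ranks (1, 1, 2, 3, 4) agree with the first-model column of Table 2.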

So far, the efficiency and rank of each accelerator have been derived separately from each model. Each accelerator therefore has two efficiency values and two ranks, which sometimes coincide and sometimes differ. The fundamental question in this section is which single efficiency value and rank should be attributed to each accelerator. To answer it, the two efficiency values of each accelerator must be combined so that a single ranking can be derived from the combined efficiency [11]. One method that can effectively integrate the efficiency values is the arithmetic mean of the efficiencies from the two models. Table 3 shows the resulting single efficiency of each accelerator and the rank obtained from it.

| Accelerator | Arithmetic mean efficiency | Ranking |

|---|---|---|

| DMU01 | 1 | 1 |

| DMU02 | 0.7163 | 8 |

| DMU03 | 0.9533 | 3 |

| DMU04 | 0.8542 | 4 |

| DMU05 | 0.4118 | 24 |

| DMU06 | 0.6028 | 12 |

| DMU07 | 0.7352 | 7 |

| DMU08 | 0.4537 | 21 |

| DMU09 | 0.6534 | 11 |

| DMU10 | 0.4572 | 20 |

| DMU11 | 0.9673 | 2 |

| DMU12 | 0.4478 | 22 |

| DMU13 | 0.1872 | 40 |

| DMU14 | 0.6684 | 10 |

| DMU15 | 0.406 | 25 |

| DMU16 | 0.2306 | 34 |

| DMU17 | 0.7432 | 6 |

| DMU18 | 0.2897 | 30 |

| DMU19 | 0.2224 | 35 |

| DMU20 | 0.1666 | 44 |

| DMU21 | 0.7566 | 5 |

| DMU22 | 0.3926 | 26 |

| DMU23 | 0.5713 | 13 |

| DMU24 | 0.4233 | 23 |

| DMU25 | 0.6761 | 9 |

| DMU26 | 0.2219 | 36 |

| DMU27 | 0.5015 | 18 |

| DMU28 | 0.306 | 28 |

| DMU29 | 0.5644 | 14 |

| DMU30 | 0.5041 | 16 |

| DMU31 | 0.2778 | 31 |

| DMU32 | 0.1885 | 39 |

| DMU33 | 0.0601 | 50 |

| DMU34 | 0.1243 | 46 |

| DMU35 | 0.5064 | 15 |

| DMU36 | 0.0554 | 51 |

| DMU37 | 0.305 | 29 |

| DMU38 | 0.5018 | 17 |

| DMU39 | 0.1568 | 45 |

| DMU40 | 0.0387 | 53 |

| DMU41 | 0.4768 | 19 |

| DMU42 | 0.0602 | 49 |

| DMU43 | 0.1956 | 38 |

| DMU44 | 0.021 | 56 |

| DMU45 | 0.1704 | 43 |

| DMU46 | 0.2093 | 37 |

| DMU47 | 0.0213 | 55 |

| DMU48 | 0.022 | 54 |

| DMU49 | 0.0145 | 57 |

| DMU50 | 0.1072 | 47 |

| DMU51 | 0.2494 | 33 |

| DMU52 | 0.0108 | 59 |

| DMU53 | 0.3231 | 27 |

| DMU54 | 0.1734 | 42 |

| DMU55 | 0.0109 | 58 |

| DMU56 | 0.1855 | 41 |

| DMU57 | 0.0485 | 52 |

| DMU58 | 0.2619 | 32 |

| DMU59 | 0.1045 | 48 |

Table 3: Performance and final ranking of American accelerators.
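The combination step described in the text, averaging the two efficiency values of each unit and ranking by the result, can be sketched as follows. The function name is hypothetical and the inputs are four rows copied from Table 1. The sketch illustrates the procedure only and does not attempt to reproduce Table 3, whose values differ from a plain two-model mean of Table 1 (for DMU02, (1 + 0.3408) / 2 = 0.6704, whereas Table 3 lists 0.7163).

```python
from typing import Dict, List, Tuple


def combine_and_rank(
    model_a: Dict[str, float], model_b: Dict[str, float]
) -> List[Tuple[str, float, int]]:
    """Average the two scores of each unit, then rank by descending mean."""
    means = {name: (model_a[name] + model_b[name]) / 2 for name in model_a}
    ordered = sorted(means.items(), key=lambda item: item[1], reverse=True)
    return [(name, mean, position) for position, (name, mean) in enumerate(ordered, start=1)]


# Efficiencies of four units under the two models, copied from Table 1.
first = {"DMU01": 1.0, "DMU02": 1.0, "DMU05": 0.4853, "DMU17": 0.913}
second = {"DMU01": 1.0, "DMU02": 0.3408, "DMU05": 0.2746, "DMU17": 0.7056}

final = combine_and_rank(first, second)
for name, mean, position in final:
    print(f"{position}. {name}: {mean:.4f}")
```

Within this subset, DMU17 moves ahead of DMU02 once both models are taken into account, although DMU02 has the higher first-model score.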

Table 3 is the final output of this study, which represents the final rating of the accelerators examined. By comparing the output of this ranking with a well-known ranking that evaluates them without considering the inputs of accelerators, interesting results are obtained.

Table 4 shows the output of the 2018 Seed Accelerator Ranking Project (SARP), which has ranked American accelerators every year since 2014.

| Ranking | Accelerator |

|---|---|

| Platinum plus | AngelPad, Y Combinator, StartX |

| Platinum | Amplify LA, MuckerLab, Techstars, U. Chicago |

| Gold | 500 Startups, gener8tor, HAX, IndieBio, MassChallenge, SkyDeck, Alchemist, Dreamit |

| Silver | Brandery, Capital Innovators, REach, Zero to 510, Healthbox, Accelerprise, AlphaLab, Health Wildcatters, Lighthouse Labs, Tech Wildcatters, TMCx |

Table 4: Output of the 2018 Seed Accelerator Ranking Project (SARP).

Discussion

Among the accelerators in Table 4, Techstars, HAX, Healthbox, Plug and Play and Zero to 510 were omitted from our analysis because their activity is trans-regional and not focused on the United States; they will be considered in another article that ranks the world's accelerators. StartX, U. Chicago, IndieBio, MassChallenge, R/GA, SkyDeck, REach and FoodX were excluded from our analysis because of incomplete information on seed-db.com. In the end, 59 top American accelerators were evaluated. There are many differences between the SARP ranking and our ranking, some of which stem from the large number of accelerators ranked [12]. AngelPad and Y Combinator, which are rated Platinum plus by SARP and listed first and second in Table 4, also rank near the top of our ranking, at 1 and 3, which reflects the high performance of these accelerators. Surprisingly, however, Upwest Labs and the Portland Incubator Experiment (PIE), which do not appear in Table 4, took third and sixth place in our ranking.

Likewise, Brandery and Dreamit, which Table 4 places in the Silver and Gold categories respectively, ranked 4th and 5th in our evaluation. The reason lies in the ratio of their successful exits and funding raised to their relatively small inputs, which is what efficiency represents. The accelerators rated Platinum in Table 4 ranked 12, 21 and 24 in our ranking, which again shows that, although the outputs of these accelerators are high, their output is low relative to their input. Comparing the rankings, inputs and outputs in Tables 3 and 4 reveals many differences. These differences indicate that, although the SARP ranking is the world's first and most comprehensive accelerator rating and ranks US accelerators annually, its reliance on output criteria and its lack of attention to inputs can lead to errors in identifying the best accelerators. In future papers, the method described in this article can be used to measure the performance of Iranian accelerators and of accelerators worldwide, which would be a good test of this new style of startup incubation.

Conclusion

In today's world, markets are changing rapidly on the basis of ideas and innovations, and many people around the world have realized their dreams of owning and starting a business. These dreams are among the most important drivers of economic development and deserve special attention; if an entrepreneurial dream is not organized well, it can end in failure and impose high financial and human costs on the entrepreneur. The entrepreneurship ecosystem has so far experimented with different growth and incubation models for new businesses, and now the startup accelerator model is being tested. This new style of incubation, if not well evaluated, can become a ground for failure. In this paper, we evaluated the performance of the top American accelerators using data envelopment analysis models, obtaining two efficiency values and two rankings for each accelerator. To arrive at a single efficiency and rank, the arithmetic mean of the efficiency values was used, and the final efficiency and rank of each accelerator were calculated. The comparison showed many differences between the SARP ranking, the world's first and most comprehensive ranking of accelerators, and the ranking provided in this article. In particular, some accelerators, such as Upwest Labs and the Portland Incubator Experiment (PIE), rank very high in our ranking although they did not obtain a place in the SARP ranking. Conversely, the platinum accelerators, which are rated among the highest in the SARP ranking, rank lower in this article. Other differences can also be found by comparing the two rankings. The reason for these differences lies not only in the criteria but also in the assessment method.

The SARP ranking considers only accelerator outputs, so an accelerator with more output receives a higher rating. For a comprehensive evaluation of a unit, however, its efficiency, i.e., the ratio of output to input, should be used for ranking. This research therefore showed once again that considering output alone is not a suitable method for evaluating the performance of a unit: a small unit with low input and output may be more efficient than a large unit with very large input and output volumes. This applies to accelerators and can be offered as a suggestion for evaluating the performance of Iranian accelerators and for modifying the SARP evaluation process. In future papers, in addition to extending the scope of performance evaluation to global and Iranian accelerators, we will use other developed methods of data envelopment analysis, including hierarchy-based data envelopment analysis. Uncertainty in the input and output data can also be considered as a future direction of research in this field.

References

- Banker RD, Charnes A, Cooper WW. Some models for estimating technical and scale inefficiencies in data envelopment analysis. Manag Sci. 1984;30(9):1078-1092.

- Charnes A, Cooper WW, Rhodes E. Measuring the efficiency of decision making units. Eur J Oper Res. 1978;2(6):429-444.

- de Tienne DR. Entrepreneurial exit as a critical component of the entrepreneurial process: Theoretical development. J Bus Ventur. 2010;25(2):203-215.

- de Clercq D, Fried VH, Lehtonen O, Sapienza HJ. An entrepreneur's guide to the venture capital galaxy. Acad Manag Perspect. 2006;20(3):90-112.

- Hackett SM, Dilts DM. A systematic review of business incubation research. J Technol Transfer. 2004;29(1):55-82.

- Fort TC, Haltiwanger J, Jarmin RS, Miranda J. How firms respond to business cycles: The role of firm age and firm size. IMF Econ Rev. 2013;61(3):520-559.

- Haltiwanger J, Jarmin RS, Miranda J. Who creates jobs? Small versus large versus young. Rev Econ Stat. 2013;95(2):347-361.

- Hess KL, Jewell CM. Phage display as a tool for vaccine and immunotherapy development. Bioeng Transl Med. 2020;5(1):e10142.

- St-Jean E, Audet J. The role of mentoring in the learning development of the novice entrepreneur. Int Entrep Manag J. 2012;8:119-140.

- Husain N, Abdullah M, Kuman S. Evaluating public sector efficiency with Data Envelopment Analysis (DEA): A case study in road transport department, Selangor, Malaysia. Total Qual Manag. 2000;11(4-6):830-836.

- Wiggins J, Gibson DV. Overview of US incubators and the case of the Austin Technology Incubator. Int J Entrep Innov Manag. 2003;3(1-2):56-66.

- Wennberg K, Wiklund J, de Tienne DR, Cardon MS. Reconceptualizing entrepreneurial exit: Divergent exit routes and their drivers. J Bus Ventur. 2010;25(4):361-375.

Citation: Nasab SSH (2024) Designing a Model for Evaluation and Classification of Startup Accelerators Using Data Envelopment Analysis Method. Review Pub Administration Manag. 12:473.

Copyright: © 2024 Nasab SSH. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.